So, I was listening to an NPR show titled "Digging into Facebook's File on You". At some point, there was some casual discussion of laws that some countries in the European Union have regarding users' ability to review and correct mistakes in the data stored about them. This made me realize that ORES needs a good mechanism for you to review how the system classifies you and your stuff.

As soon as I realized this, I wrote up a ticket describing my rough thoughts on what a "refutation" system would look like. From https://phabricator.wikimedia.org/T148700:

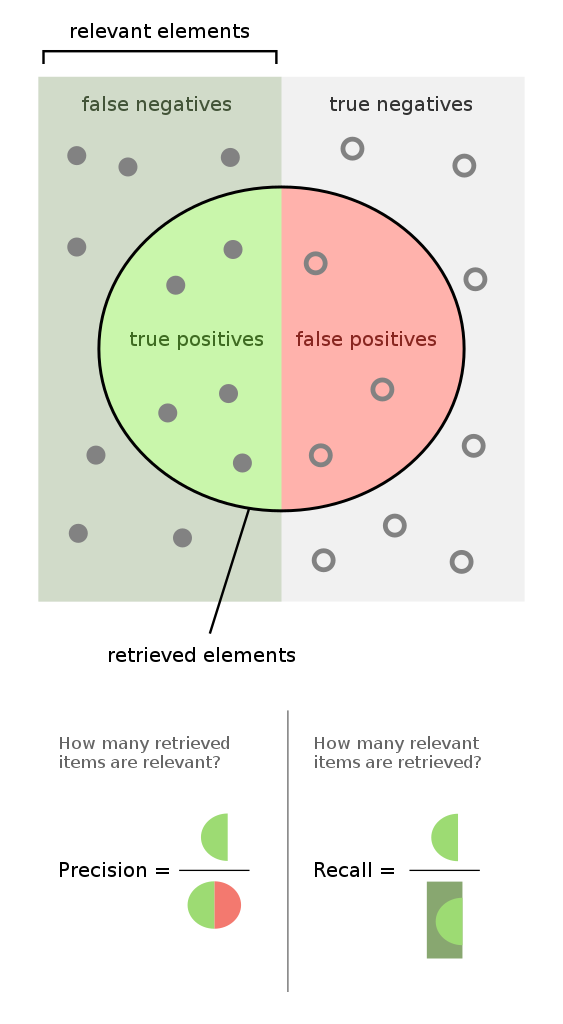

Build a user-friendly UI for reviewing how ORES has classified you or any query-able slice of activity.

Include UI & API endpoints to refute classifications (via OAuth).

Include UI to curate "refutations" (suppress & meta-review).

Notes:

- Refutations need freeform text. Freeform text *must* have a suppression system.

- We should include refutations in ORES query results.

- We can borrow from MediaWiki's global user rights (ORES is global) for curation privs.

On one hand, I'm excited by this idea because I think it will be a very interesting exploration into what kinds of feedback people want to give about ORES' classifications. I think it will give humans an effective way to disagree with the machine. It's important that we don't hide false-positive/negative reports somewhere no one will ever see them. It would be better if such reports were returned as part of a query result, so that an ORES decision can be flagged and directly challenged by a human.
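To make the ticket's notes a bit more concrete, here is a minimal Python sketch of how refutations might travel along with a score. Everything in it is hypothetical: the `Refutation` schema, the in-memory `RefutationStore`, the `ores-curate-refutations` right name, and the shape of the score document are illustrative assumptions, not ORES' actual API or storage. It shows three ideas from the ticket: refutations carry freeform text, only curators holding a (MediaWiki-style) right can suppress that text, and refutations are merged into the query result rather than kept somewhere tools will never see them.

```python
from dataclasses import dataclass
from typing import Dict, List, Set

# Hypothetical right name, loosely modeled on MediaWiki global user rights.
CURATE_RIGHT = "ores-curate-refutations"


@dataclass
class Refutation:
    """A human's disagreement with one ORES classification (hypothetical schema)."""
    rev_id: int
    model: str
    author: str              # assumed to be an OAuth-authenticated username
    comment: str             # freeform text, hence the need for suppression
    suppressed: bool = False


class RefutationStore:
    """In-memory stand-in for whatever storage a real system would use."""

    def __init__(self) -> None:
        self._items: List[Refutation] = []

    def add(self, refutation: Refutation) -> int:
        self._items.append(refutation)
        return len(self._items) - 1  # list index doubles as a simple id

    def suppress(self, refutation_id: int, curator_rights: Set[str]) -> None:
        # Only users holding the curation right may hide freeform text.
        if CURATE_RIGHT not in curator_rights:
            raise PermissionError("missing right: " + CURATE_RIGHT)
        self._items[refutation_id].suppressed = True

    def for_score(self, rev_id: int, model: str) -> List[Refutation]:
        return [r for r in self._items
                if r.rev_id == rev_id and r.model == model]


def attach_refutations(score_doc: Dict, rev_id: int, model: str,
                       store: RefutationStore) -> Dict:
    """Return a copy of a score document with its refutations alongside it.

    Suppressed refutations are still listed, but their text is withheld.
    """
    result = dict(score_doc)  # shallow copy; the caller's dict is untouched
    result["refutations"] = [
        {"author": r.author,
         "comment": None if r.suppressed else r.comment,
         "suppressed": r.suppressed}
        for r in store.for_score(rev_id, model)
    ]
    return result


if __name__ == "__main__":
    store = RefutationStore()
    store.add(Refutation(rev_id=123, model="damaging", author="ExampleUser",
                         comment="This edit fixed a typo; it is not damaging."))
    score = {"prediction": True}  # hypothetical, heavily simplified score
    print(attach_refutations(score, 123, "damaging", store))
```

In this sketch a suppressed refutation still shows up in the result, so the fact that a score was contested isn't lost; only its text is hidden. A real system would also have to decide whether suppression should hide the author and how meta-review of curators' decisions would work.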

On the other hand, I'm a little bit embarrassed that this wasn't part of the plan all along. I guess, in a way, I expected the wiki to provide this infrastructure for critiquing ORES. But critiques on the wiki are hidden from the API that tools use to consume scores, which relegates them to a less effectual class of data. I feel a little bit stupid that it never occurred to me that humans should be able to affect ORES' output.

Now to find the resources to construct this Meta-ORES system...